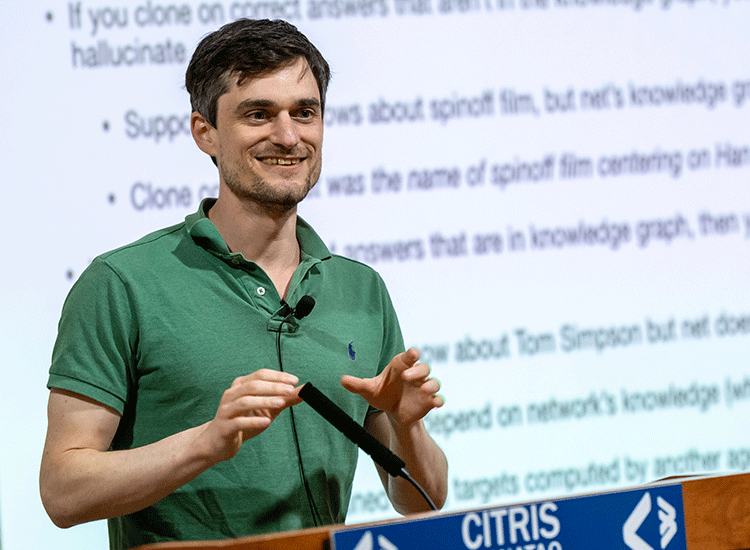

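John Schulman discussed recent advances in reinforcement learning and truthfulness during the EECS Distinguished Lecture Series on Wednesday, April 19. (UC Berkeley photo by Jim Block)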

John Schulman cofounded the ambitious software company OpenAI in December 2015, shortly before finishing his Ph.D. in electrical engineering and computer sciences at UC Berkeley. At OpenAI, he led the reinforcement learning team that developed ChatGPT, a chatbot built on the company’s generative pre-trained transformer (GPT) language models. ChatGPT has become a global sensation thanks to its ability to generate remarkably human-like responses.

During a campus visit on Wednesday, Berkeley News spoke with Schulman about why he chose Berkeley for graduate school, the allure of towel-folding robots, and what he sees for the future of artificial general intelligence.

This interview has been edited for length and clarity.

Berkeley News: You studied physics as an undergraduate at the California Institute of Technology and initially came to UC Berkeley to do a Ph.D. in neuroscience before switching to machine learning and robotics. Could you talk about your interests and what led you from physics to neuroscience and then to artificial intelligence?

John Schulman: Well, I am curious about understanding the universe, and physics seemed like the area to study for that, and I admired the great physicists like Einstein. But then I did a couple of summer research projects in physics and wasn’t excited about them, and I found myself more interested in other topics. Neuroscience seemed exciting, and I was also kind of interested in AI, but I didn’t really see a path that I wanted to follow [in AI] as much as in neuroscience.

When I came to Berkeley in the neuroscience program and did lab rotations, I did my last one with Pieter Abbeel. I thought Pieter’s work on helicopter control and towel-folding robots was pretty interesting, and when I did my rotation, I got really excited about that work and felt like I was spending all my time on it. So, I asked to switch to the EECS (electrical engineering and computer sciences) department.

Why did you choose Berkeley for graduate school?

I had a good feeling about it, and I liked the professors I talked to during visit day.

I also remember I went running the day after arriving, and I went up that road toward Berkeley Lab, and there was a little herd of deer, including some baby deer. It was maybe 7:30 in the morning, and no one else was out. So, that was a great moment.

What were some of your early projects in Pieter Abbeel’s lab?

There were two main threads in [Abbeel’s] lab — surgical robotics and personal robotics. I don’t remember whose idea it was, but I decided to work on tying knots with the PR2 [short for personal robot 2]. I believe [the project] was motivated by the surgical work — we wanted to do knot tying for suturing, and we didn’t have a surgical robot, so I think we just figured we would use the PR2 to try out some ideas. It’s a mobile robot, it’s got wheels and two arms and a head with all sorts of gizmos on it. It’s still in Pieter’s lab, but not being used anymore — it’s like an antique.

As a graduate student at Berkeley, you became one of the pioneers of a type of artificial intelligence called deep reinforcement learning, which combines deep learning — training complex neural networks on large amounts of data — with reinforcement learning, in which machines learn by trial and error. Could you describe the genesis of this idea?
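
The trial-and-error half of that definition can be made concrete with a toy example. The sketch below is an illustration only, not code from Schulman or Abbeel’s lab: it assumes a hypothetical three-armed "bandit" whose payout probabilities are hidden from the learner, which improves only by trying actions and averaging the rewards it observes. In deep reinforcement learning, the small table of per-action estimates would be replaced by a neural network trained on far more experience.

```python
import random


def epsilon_greedy_bandit(payout_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which arm pays best purely by trial and error.

    payout_probs: hypothetical success probability of each arm. The learner
    never reads these directly; it only sees the 0/1 reward of each pull.
    """
    rng = random.Random(seed)
    n_arms = len(payout_probs)
    estimates = [0.0] * n_arms  # running average reward observed per arm
    counts = [0] * n_arms       # how many times each arm was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            # exploit: pick the arm that currently looks best
            arm = max(range(n_arms), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0
        counts[arm] += 1
        # incremental mean: nudge the estimate toward the observed reward
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


if __name__ == "__main__":
    est, n = epsilon_greedy_bandit([0.2, 0.5, 0.8])
    print("estimates:", est, "pulls:", n)
```

Calling `epsilon_greedy_bandit([0.2, 0.5, 0.8])` should leave the estimate for the last arm close to 0.8, with most pulls spent on that arm. The `epsilon` parameter controls how often the learner keeps exploring instead of exploiting its current best guess.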

After I had done a few projects in robotics, I was starting to think that the methods weren’t robust enough — that it would be hard to do anything really sophisticated or anything in the real world because we had to do so much engineering for each specific demo we were trying to make.

Around that time, people had gotten some good results applying deep learning to computer vision, and everyone in AI was starting to think about those results and what they meant. Deep learning seemed to make it possible to build these really robust models by training on lots of data. So, I started to wonder: How do we apply deep learning to robotics? And the conclusion I came to was reinforcement learning.

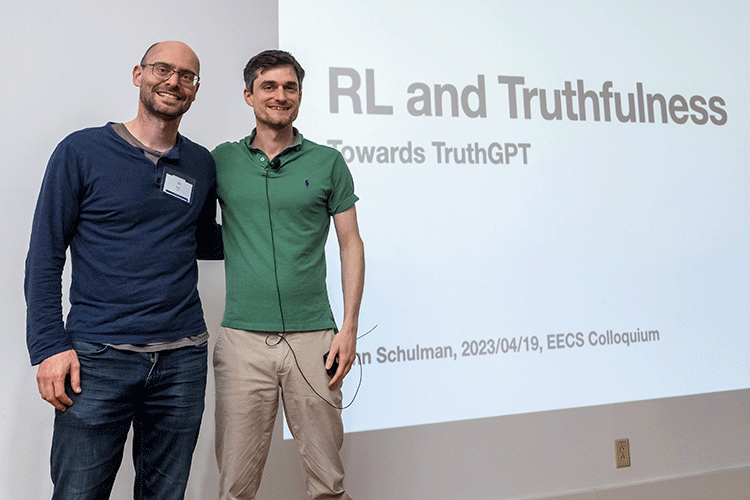

Schulman with his Ph.D. advisor Pieter Abbeel, a professor of electrical engineering and computer sciences at UC Berkeley, before the EECS Colloquium on Wednesday, April 19. (UC Berkeley photo by Jim Block)

You became one of the co-founders of OpenAI in late 2015, while you were still finishing your Ph.D. work at Berkeley. Why did you decide to join this new venture?

I wanted to do research in AI, and I thought that OpenAI was ambitious in its mission and was already thinking about artificial general intelligence (AGI). It seemed crazy to talk about AGI at the time, but I thought it was reasonable to start thinking about it, and I wanted to be at a place where that was acceptable to talk about.

What is artificial general intelligence?

Well, it’s become a little bit vague. You could define it as AI that can match or exceed human abilities in basically every area. And seven years ago, it was pretty clear what that term was pointing at because the systems at the time were extremely narrow. Now, I think it’s a little less clear because we see that AI is getting really general, and something like GPT-4 is beyond human ability in a lot of ways.

In the old days, people would talk about the Turing test as the big goal the field was shooting for. And now I think we’ve sort of quietly blown past the point where AI can have a multi-step conversation at a human level. But we don’t want to build models that pretend they’re humans, so it’s not actually the most meaningful goal to shoot for anymore.

From what I understand, one of the main innovations behind ChatGPT is a technique called reinforcement learning from human feedback (RLHF). In RLHF, humans help direct how the AI behaves by rating how it responds to different inquiries. How did you get the idea to apply RLHF to ChatGPT?

Well, there had been papers about this for a while, but I’d say the first version that looked similar to what we’re doing now was actually a paper from OpenAI, “Deep reinforcement learning from human preferences,” whose first author is actually another Berkeley alum, Paul Christiano, who had just joined the OpenAI safety team. The OpenAI safety team had worked on this effort because the idea was to align our models with human preference — try to get [the models] to actually listen to us and try to do what we want.
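
The preference-learning idea Schulman describes can be sketched in a few lines. In the paper he mentions, a reward model is fit to human judgments of which of two behaviors is better, using a Bradley-Terry-style model in which the probability that one option is preferred is a logistic function of the difference between their predicted rewards; reinforcement learning then optimizes the agent against that learned reward. The sketch below covers only the reward-fitting step and rests on simplifying assumptions: a linear reward over tiny hand-made feature vectors (the hypothetical features stand for "helpful" and "made things up") and plain gradient ascent. It is not OpenAI’s implementation, which used neural networks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def reward(w, x):
    # Linear reward model r(x) = w . x, a stand-in for a neural network.
    return sum(wi * xi for wi, xi in zip(w, x))


def fit_reward_model(comparisons, dim, lr=0.5, epochs=200):
    """Fit weights w from (preferred, rejected) pairs of feature vectors.

    Bradley-Terry: P(preferred beats rejected) = sigmoid(r(preferred) - r(rejected)).
    We maximize the log of that probability by gradient ascent.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            p = sigmoid(reward(w, preferred) - reward(w, rejected))
            # Gradient of log sigmoid(w . (a - b)) with respect to w is (1 - p) * (a - b).
            for i in range(dim):
                w[i] += lr * (1.0 - p) * (preferred[i] - rejected[i])
    return w


# Hypothetical features per response: [helpful, made_up_facts].
# Each pair is (response a labeler preferred, response the labeler rejected).
EXAMPLE_COMPARISONS = [
    ([1.0, 0.0], [0.0, 0.0]),  # a helpful answer beats an unhelpful one
    ([0.5, 0.0], [0.5, 1.0]),  # equally helpful, but the one without fabrication wins
    ([1.0, 0.0], [1.0, 1.0]),
]

if __name__ == "__main__":
    weights = fit_reward_model(EXAMPLE_COMPARISONS, dim=2)
    print("learned weights:", weights)
```

With these example comparisons, the learned weight on the hypothetical `helpful` feature comes out positive and the weight on `made_up_facts` negative, so the model scores each preferred response above the rejected one. Fitting on pairwise comparisons rather than absolute scores sidesteps the fact that different raters use a rating scale differently.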

That first paper was not in the language domain; it was on Atari and simulated robotics tasks. And then they followed that up with work using language models for summarization. That was around the time GPT-3 was done training, and then I decided to jump on the bandwagon because I saw the potential in that whole research direction.

What was your reaction when you first started interacting with ChatGPT? Were you surprised at how well it worked?

I would say that I saw the models gradually change and gradually improve. One funny detail is that GPT-4 was done training before we released ChatGPT, which is based on GPT-3.5 — a weaker model. So at the time, no one at OpenAI was that excited about ChatGPT because there was this much stronger, much smarter model that had been trained. We had also been beta testing the chat model on a group of maybe 30 or 40 friends and family, and they basically liked it, but no one really raved about it.

So, I ended up being really surprised at how much it caught on with the general public. And I think it’s just because it was easier to use than models of similar quality that they had interacted with before. And [ChatGPT] was also maybe slightly above threshold in terms of hallucinating less, meaning it made less stuff up, and it had a little more self-awareness. I also think there is a positive feedback effect, where people show each other how to use it effectively and get ideas by seeing how other people are using it.

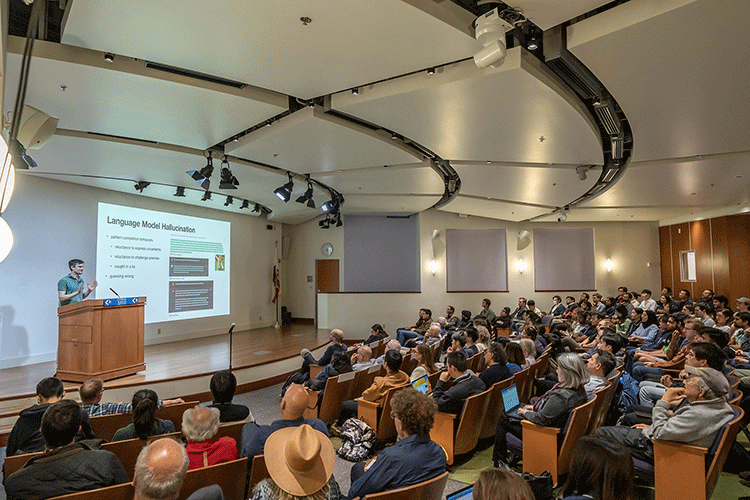

The audience for Schulman’s EECS Colloquium Distinguished Lecture filled the Banatao Auditorium in Sutardja Dai Hall. (UC Berkeley photo by Jim Block)

The success of ChatGPT has renewed fears about the future of AI. Do you have any concerns about the safety of the GPT models?

I would say that there are different kinds of risks that we should distinguish between. First, there’s the misuse risk — that people will use the model to get new ideas on how to do harm or will use it as part of some malicious system. And then there’s the risk of a treacherous turn, that the AI will have some goals that are misaligned with ours and wait until it’s powerful enough and try to take over.

For misuse risk, I’d say we’re definitely at the stage where there is some concern, though it’s not an existential risk. I think if we released GPT-4 without any safeguards, it could cause a lot of problems by giving people new ideas on how to do various bad things, and it could also be used for various kinds of scams or spam. We’re already seeing some of that, and it’s not even specific to GPT-4.

As for the risk of a takeover or treacherous turn, it’s definitely something we want to be very careful about, but it’s quite unlikely to happen. Right now, the models are just trained to produce a single message that gets high approval from a human reader, and the models themselves don’t have any long-term goals. So, there’s no reason for the model to have a desire to change anything about the external world. There are some arguments that this might be dangerous anyway, but I think those are a little far-fetched.

Now that ChatGPT has in many ways passed the Turing test, what do you think is the next frontier in artificial general intelligence?

I’d say that AIs will keep getting better at harder tasks, and tasks that used to be done by humans will gradually become things a model can do perfectly well, possibly better. Then, there will be questions of what humans should be doing: what are the parts of the tasks where humans can have more leverage and do more work with the help of models? So, I would say it’ll just be a gradual process of automating more things and shifting what people are doing.

Audience members took selfies with Schulman after the talk. (UC Berkeley photo by Jim Block)
