作者探索了多智能体领域的深度强化学习方法,首先分析传统算法在多智能体情况下的难度:Q-learning 受到环境固有的非平稳性的挑战,而策略梯度受到随着智能体数量增加而增加的方差的影响。 然后提出了一种对 actor-critic 方法的改进,该方法考虑了其他智能体的动作策略,并且能够成功地学习需要复杂的多代理协调的策略。 此外作者引入了一种训练方案,该方案利用针对每个智能体的一组策略,从而产生更强大的多智能体策略, 与合作和竞争场景中的现有方法相比,该智能体群体能够发现各种物理和信息协调策略。

论文地址: https://arxiv.org/pdf/1706.02275.pdf

Github地址: https://github.com/philtabor/Multi-Agent-Deep-Deterministic-Policy-Gradients

环境地址:https://github.com/openai/multiagent-particle-envs

B站视频:

视频时间线:

time stamps:

0:00 Intro

02:28 Abstract

03:18 Paper Intro

08:13 Related Works

09:02 Markov Decision Processes

10:35 Q Learning Explained

15:25 Policy Gradients Explained

19:14 Why Multi Agent Actor Critic is Hard

20:15 DDPG Explained

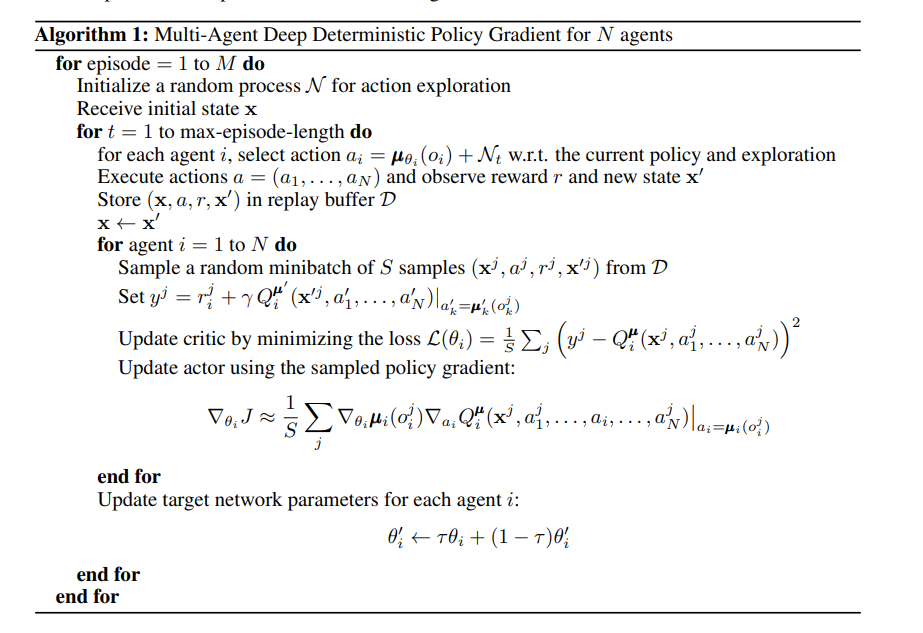

24:21 MADDPG Explained

29:11 Experiments

37:57 How to Implement MADDPG

40:54 MADDPG Algorithm

42:23 Hyperparameters for MADDPG

43:42 Multi Agent Particle Environment

45:09 Environment Install & Testing

55:37 Coding the Replay Buffer

01:07:34 Actor & Critic Networks

01:15:36 Coding the Agent

01:26:05 Coding the MADDPG Class

01:39:23 Coding the Utility Function

01:40:13 Coding the Main Loop

01:46:58 Moment of Truth

01:52:09 Testing on Physical Deception

01:55:48 Conclusion & Results