Cognitive Mapping and Planning for Visual Navigation (<a id='CMP'>CMP</a>)

Saurabh Gupta, Varun Tolani, James Davidson, Sergey Levine, Rahul Sukthankar, Jitendra Malik <br>

CVPR, 2017. [Paper]

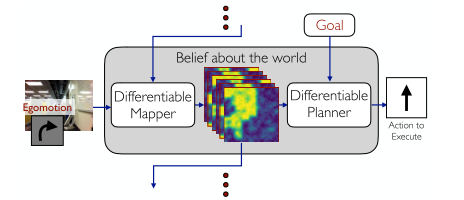

Two key ideas:

a unified joint architecture for mapping and planning, such that the mapping is driven by the needs of the task;

a spatial memory with the ability to plan given an incomplete set of observations about the world.

CMP constructs a top-down belief map of the world and applies a differentiable neural net planner to produce the next action at each time step.

Network architecture

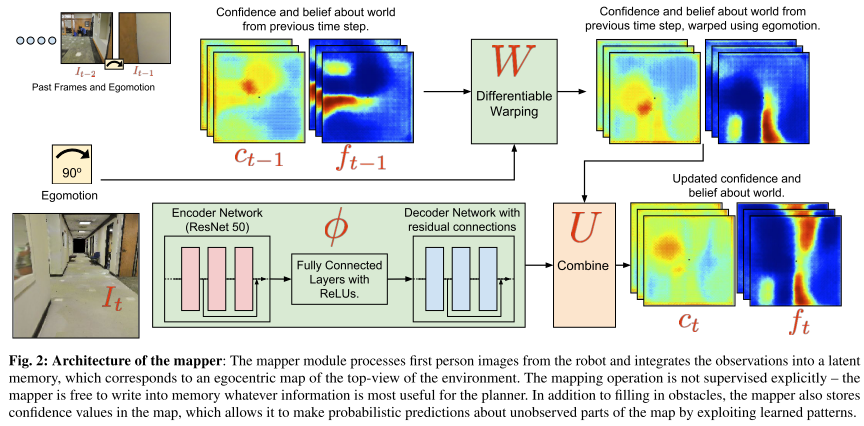

Architecture of the mapper

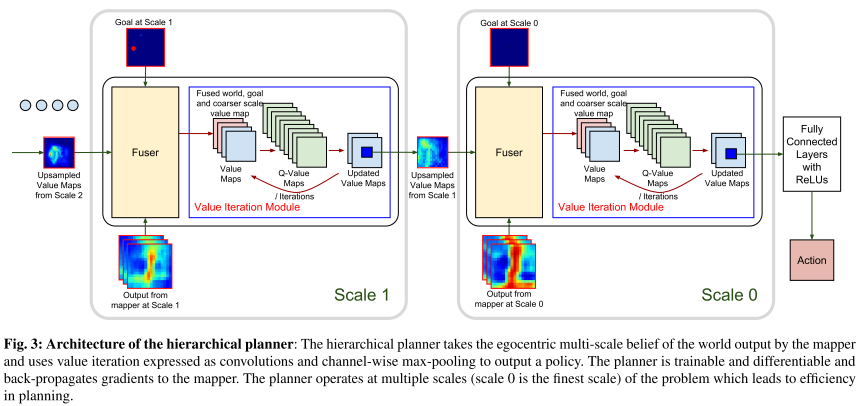

Architecture of the hierarchical planner

Visual Semantic Navigation using Scene Priors (<a id='Scene_Priors'>Scene Priors</a>)

Wei Yang, Xiaolong Wang, Ali Farhadi, Abhinav Gupta, Roozbeh Mottaghi <br>

ICLR, 2019. [Paper]

They address navigation to novel objects or navigating in unseen scenes using scene priors, as a human does.

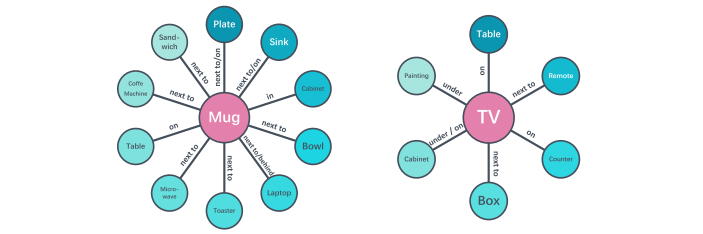

Scene Priors

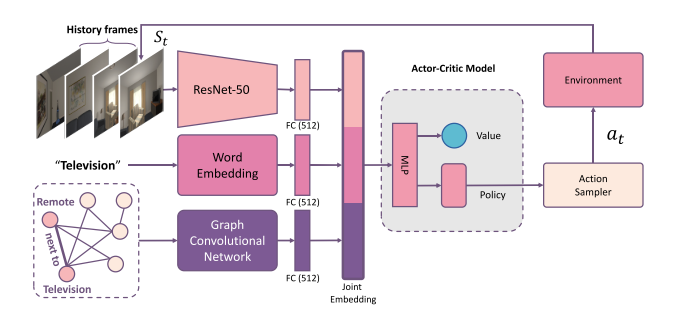

Architecture

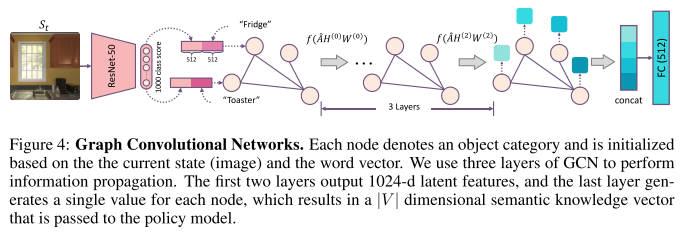

GCN for relation graph embedding

Visual Representations for Semantic Target Driven Navigation (<a id='VR'>VR</a>)

Arsalan Mousavian, Alexander Toshev, Marek Fiser, Jana Kosecka, Ayzaan Wahid, James Davidson <br>

ICRA, 2019. [Paper] [Code]

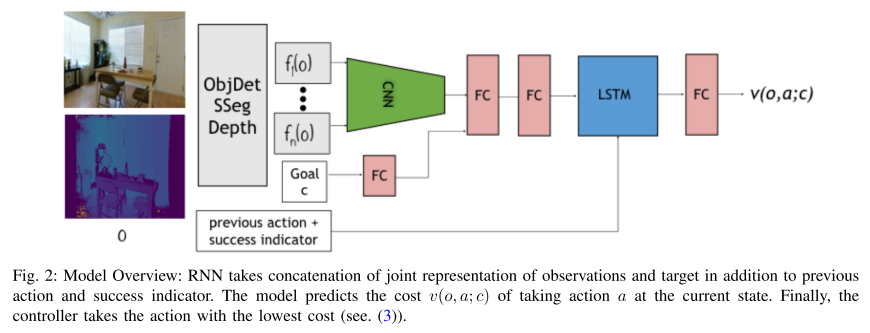

This paper focuses on finding a good visual representation.

Learning to Learn How to Learn: Self-Adaptive Visual Navigation using Meta-Learning (SAVN)

Mitchell Wortsman, Kiana Ehsani, Mohammad Rastegari, Ali Farhadi, Roozbeh Mottaghi <br>

CVPR, 2019. [Paper] [Code] [Website]

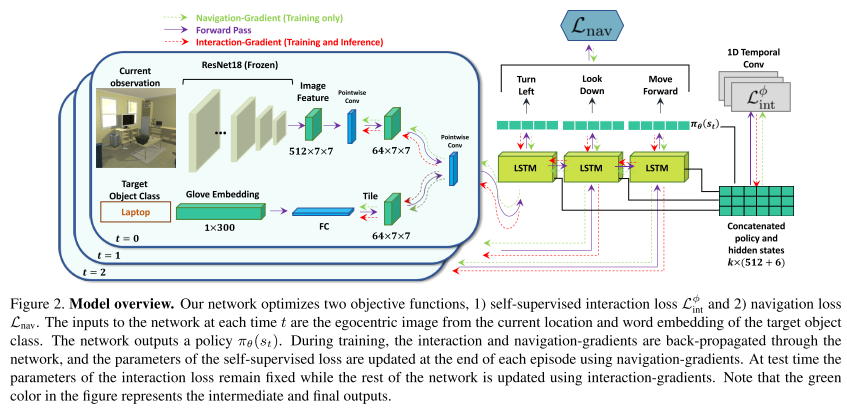

This paper uses Meta-reinforcement learning to construct an interaction loss for self-adaptive visual navigation.

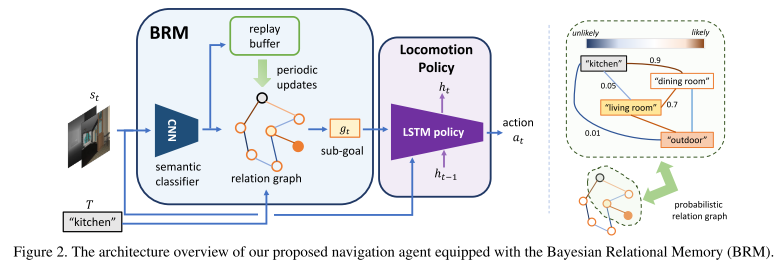

Bayesian Relational Memory for Semantic Visual Navigation (BRM)

Yi Wu, Yuxin Wu, Aviv Tamar, Stuart Russell, Georgia Gkioxari, Yuandong Tian <br>

ICCV, 2019. [Paper] [Code]

Construct a probabilistic relation graph to learn the relationship or a topological memory of the house layout.

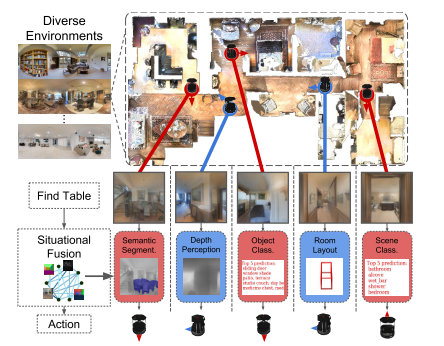

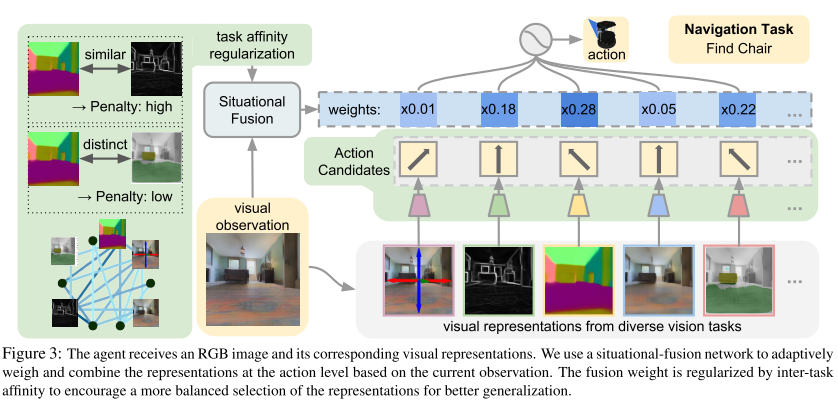

Situational Fusion of Visual Representation for Visual Navigation (SF)

William B. Shen, Danfei Xu, Yuke Zhu, Leonidas J. Guibas, Li Fei-Fei, Silvio Savarese <br>

ICCV, 2019. [Paper]

This paper aims at fusing multiple visual representations, such as Semantic Segment, Depth Perception, Object Class, Room Layout, and Scene Class.

They develop an action-level representation fusion scheme, which predicts an action candidate from each representation and adaptively consolidate these action candidates into the final action.

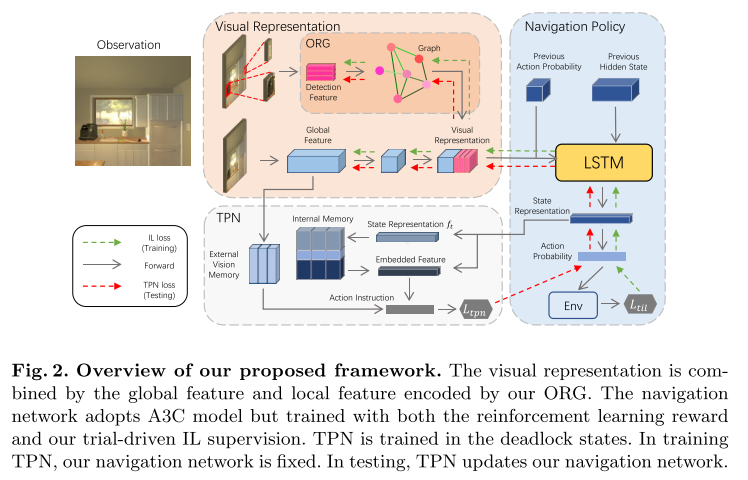

Learning Object Relation Graph and Tentative Policy for Visual Navigation (ORG)

Heming Du, Xin Yu, Liang Zheng <br>

ECCV, 2020. [Paper]

Aiming to learn informative visual representation and robust navigation policy, this paper proposes three complementary techniques, object relation graph (ORG), trial-driven imitation learning (IL), and a memory-augmented tentative policy network (TPN).

ORG improves visual representation learning by integrating object relationships;

Both Trial-driven IL and TPN underlie robust navigation policy, instructing the agent to escape from deadlock states, such as looping or being stuck;

IL is used in training, TPN for testing.

Target driven visual navigation exploiting object relationships (MJOLNIR)

Yiding Qiu, Anwesan Pal, Henrik I. Christensen <br>

arxiv, 2020. [Paper]

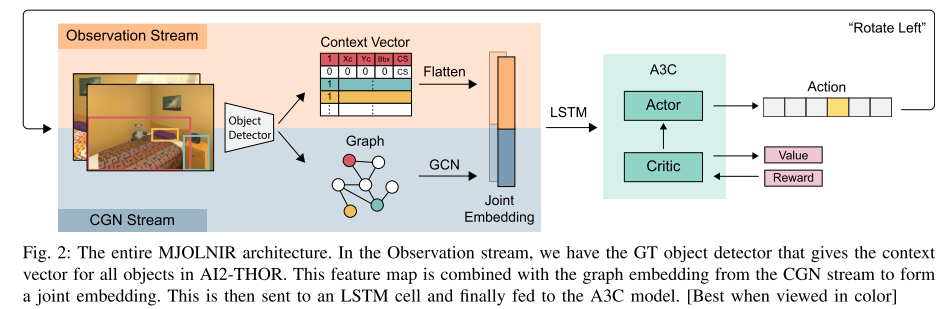

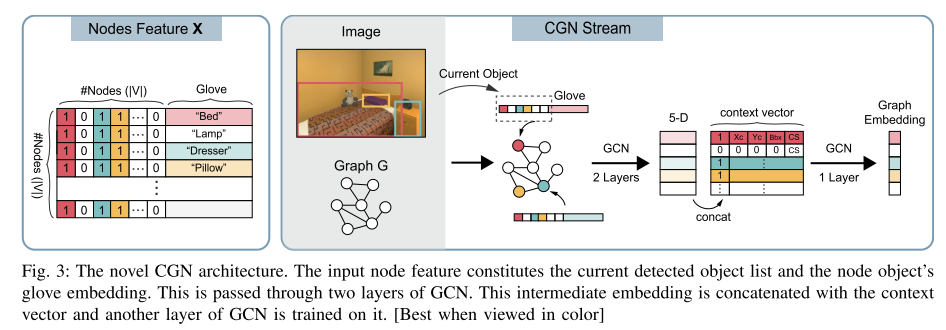

They present Memory-utilized Joint hierarchical Object Learning for Navigation in Indoor Rooms (MJOLNIR)1, a target-driven visual navigation algorithm, which considers the inherent relationship between target objects, along with the more salient parent objects occurring in its surrounding.

MJOLNIR architecture

The novel CGN architecture

Improving Target-driven Visual Navigation with Attention on 3D Spatial Relationships (Attention 3D)

Yunlian Lv, Ning Xie, Yimin Shi, Zijiao Wang, and Heng Tao Shen <br>

arxiv, 2020. [Paper]

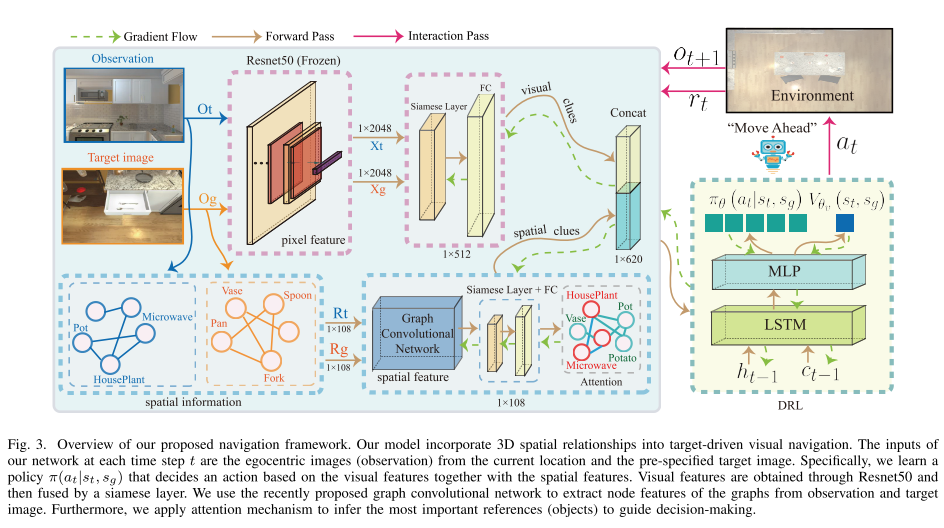

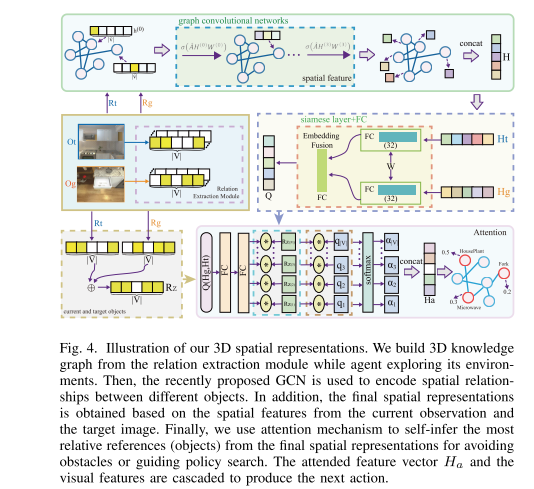

To address the generalization and automatic obstacle avoidance issues, we incorporate two designs into the classic DRL framework: attention on 3D knowledge graph (KG) and target skill extension (TSE) module.

visual features and 3D spatial representations to learn navigation policy;

TSE module is used to generate sub-targets that allow the agent to learn from failures.

Framework

3D spatial representations

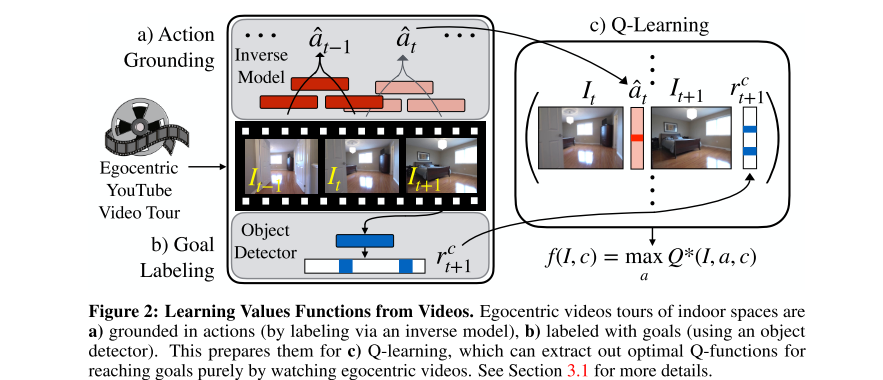

Semantic Visual Navigation by Watching YouTube Videos (YouTube)

Matthew Chang, Arjun Gupta, Saurabh Gupta <br>

arXiv, 2020. [Paper] [Website]

This paper learns and leverages such semantic cues for navigating to objects of interest in novel environments, by simply watching YouTube videos. They believe that these priors can improve efficiency for navigation in novel environments.

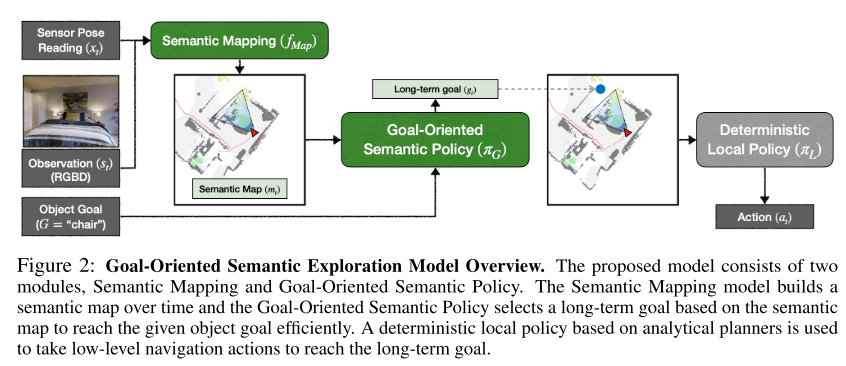

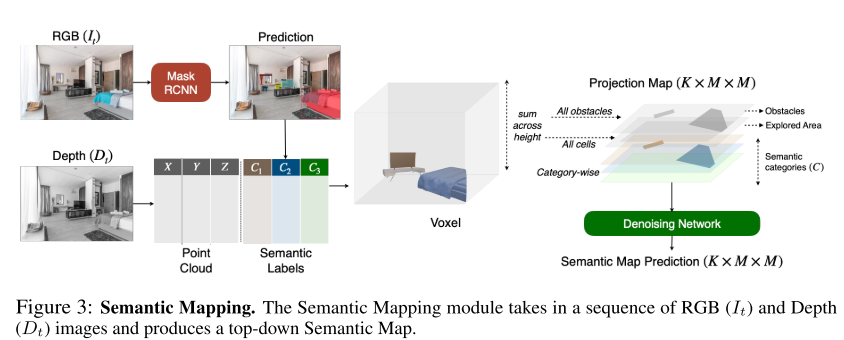

Object Goal Navigation using Goal-Oriented Semantic Exploration (SemExp)

Devendra Singh Chaplot, Dhiraj Gandhi, Abhinav Gupta*, Ruslan Salakhutdinov <br>

arXiv, 2020. [Paper] [Website]

Win CVPR2020 Habitat ObjectNav Challenge

They propose a modular system called, ‘Goal- Oriented Semantic Exploration (SemExp)’ which builds an episodic semantic map and uses it to explore the environment efficiently based on the goal object category.

It builds top-down metric maps, which adds extra channels to encode semantic categories explicitly;

Instead of using a coverage maximizing goal-agnostic exploration policy based only on obstacle maps, we train a goal-oriented semantic exploration policy that learns semantic priors for efficient navigation.

Framework

Semantic Mapping

ObjectNav Revisited: On Evaluation of Embodied Agents Navigating to Objects

Dhruv Batra, Aaron Gokaslan, Aniruddha Kembhavi, Oleksandr Maksymets, Roozbeh Mottaghi, Manolis Savva, Alexander Toshev, Erik Wijmans <br>

arXiv, 2020. [Paper]

This paper is not a research paper. They summarize the ObjectNav task and introduce popular datasets (Matterport3D, AI2-THOR) and Challenges (Habitat 2020 Challenge Habitat, RoboTHOR 2020 Challenge RoboTHOR).